0%

Ever wonder why your shiny new AI system feels like a sitting duck?

Here’s what the cybersecurity industry won’t admit: traditional security testing is basically useless against AI systems. AI security testing is the real way to uncover hidden vulnerabilities—we’re trying to protect rockets with bicycle locks.

AI pentesting—or more specifically, AI penetration testing —isn’t just regular security with fancy name—it’s a completely different beast. While your typical pentest pokes at networks and applications, AI pentesting digs into how machine learning models think, learn, and can be tricked.

Here’s the kicker: 82% of C-level executives say their business success depends on secure AI… but only 24% of generative AI projects actually include security. That’s like saying you care about fire safety while building houses out of matchsticks.

Even worse? 27% of organizations have outright banned GenAI because they’re too scared of the risks. Can you blame them?

Attackers don’t need to hack your database when they can just sweet-talk your model into spilling secrets. It’s not about brute force—it’s about manipulating behavior. AI systems face attacks that sound like science fiction but happen every day. And most companies aren’t even testing for them.

Forget everything you know about traditional pentests. SQL injection, cross-site scripting, misconfigured firewalls—they barely scratch the surface with AI. These systems aren’t just software—they’re decision-makers. And attackers can manipulate them without touching your infrastructure.

AI pentesting has one core goal: understand how your model thinks. This isn’t just coding—it’s psychology. Security experts map how AI receives input, makes decisions, and where vulnerabilities might hide.

Unlike classic pentests, AI-focused testing examines behavior, not just surrounding code. Threats like prompt injection, data poisoning, and model inversion aren’t buzzwords—they’re real risks that can subtly manipulate outputs.

Think of it this way: would you rather crack a vault or convince the guard to hand you the keys? AI attacks exploit influence, not brute force. By combining automation with human expertise, AI-powered pentesting delivers faster reconnaissance, deeper coverage, and sharper risk prioritization than traditional methods—turning security testing into a continuous, adaptive defense.

Because AI systems evolve constantly, testing must be continuous to stay ahead of new risks.

Experts who understand both AI and cybersecurity are scarce, making specialized testing even more critical.

"AI will not replace humans, but those who use AI will replace those who don't" - Ginni Rometty, Former CEO of IBM

AI isn’t just answering questions anymore—it’s making decisions, handling sensitive data, and driving revenue. That makes LLMs prime targets. Traditional pentests barely scratch the surface. LLM penetration testing focuses on uncovering prompt injection, model theft, and plugin vulnerabilities. Here’s what your team needs to focus on.

Prompt injection drives most AI breaches. Attackers don’t hack your database—they sweet-talk your model into spilling secrets.

Even heavily “protected” models? Jailbroken in under ten attempts. That’s not just a risk—it’s a flashing red alarm.

Plugins boost your AI—but also widen the attack surface. 64% of enterprise LLMs run at least one insecure plugin. Some grant “excessive agency,” letting your AI call APIs, manipulate data, or act without oversight.

Example: A finance chatbot accidentally exposed live transaction data via a PDF plugin. Designed for reporting—it became a breach.

Your model isn’t just code—it’s a multimillion-dollar asset. Model extraction attacks can steal $2–5 million in training investment. Open-source weights? Sometimes preloaded with hidden backdoors. Intellectual property and trust are on the line.

Attackers can drain budgets fast. Flood your model with complex prompts and watch a $0.03 API call balloon into $3.75. Usage spikes by 700–1200%. Repeat across thousands of calls and you’ve got a stealthy EDoS attack—no alerts, just rising costs and slower responses.

Beneath the headlines lie subtler attacks:

These aren’t bugs—they’re trust failures baked into the way AI learns.

Securing your LLM means covering both the obvious and the hidden. A thorough pentest maps vulnerabilities from prompts to plugins, from model theft to adversarial inputs—keeping your AI safe, reliable, and resilient.

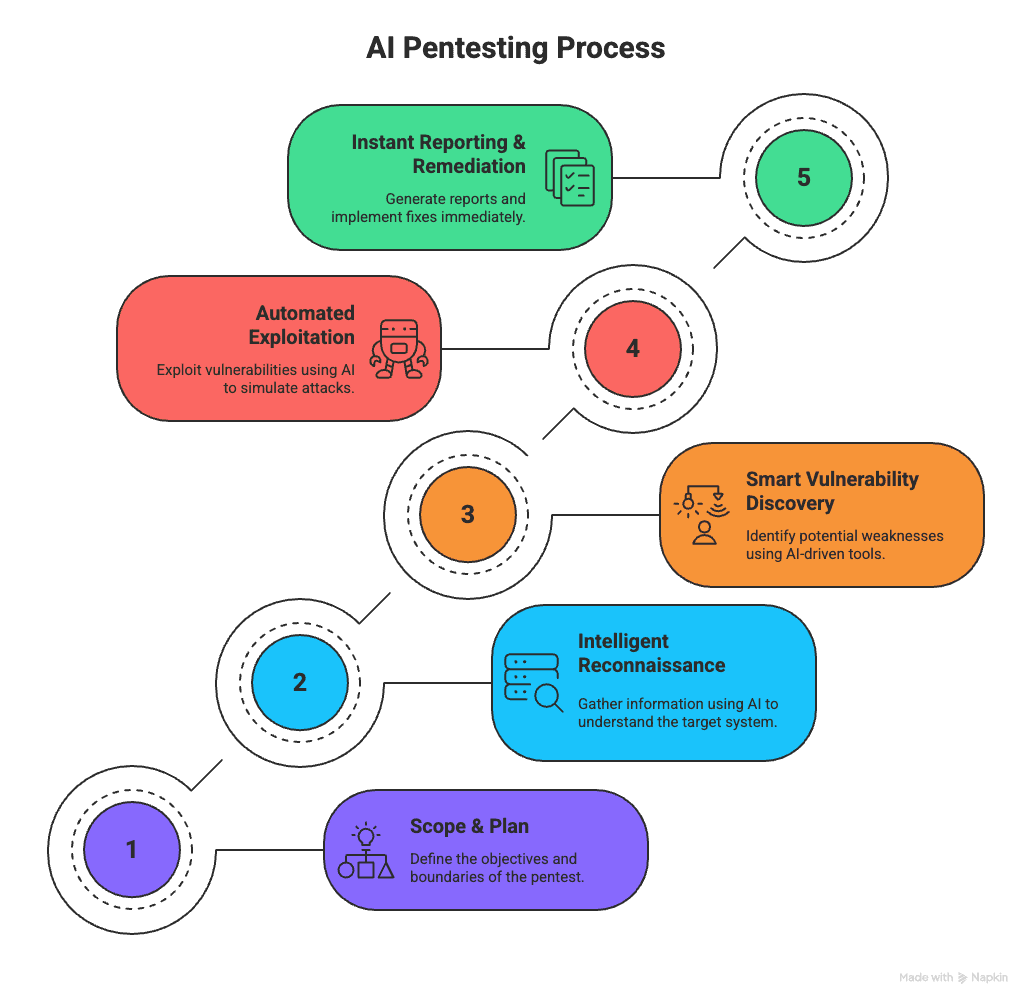

Pentesting AI isn’t a one-off check. It’s a full-cycle fight: automation plus human judgment, hunting and fixing real flaws fast. Think of it as a playbook that keeps learning. Here’s the runbook:

AI Pentesting Process

Now—here’s how each step actually plays out.

Start with the map and the mission. Decide targets, metrics, and kill switches. AI inventories cloud, on-prem, and model endpoints so nothing hides. Loop in engineering, ops, and legal early to lock priorities and rules of engagement.

Light up the whole surface. Machine learning tears through public data, repos, logs, and dark-web chatter. NLP stitches clues into a live attack map that updates as fresh intel drops.

Zero in on weaknesses that matter. Parallel scans hit networks, APIs, and models while ML kills false positives and ranks issues by exploitability and business impact—so your team fixes the real risks first.

Prove the threat—safely. Adaptive agents craft targeted payloads and pivot as defenses react. Human reviewers set kill switches and validate proof-of-concepts to keep the test sharp but controlled.

Close the loop fast. AI drafts prioritized fixes, opens tickets, and feeds them straight into CI/CD for automated retest. Retests confirm patches and sharpen the next run.

Run this loop quarterly—or nonstop for critical systems—and pentesting stops being a checkbox. It becomes a living shield. Start small. Iterate fast. Waiting costs more than acting now.

You know AI systems are vulnerable. Now what?

Time to arm yourself with tools built for the AI battlefield—not your standard network scanners.

These are the AI pen testing tools built specifically to uncover vulnerabilities in language models and AI-driven applications:

AI Pentesting Tools.png

Let’s get to know how each of them is used—and what makes them essential for securing modern AI systems.

Xbow

Xbow is an enterprise-grade red teaming platform designed specifically for AI systems. It’s built to simulate real-world attacks on language models, identify exploitable behaviors, and provide clear remediation paths.

What sets Xbow apart:

It’s used by top AI labs and Fortune 500s to test not just vulnerabilities—but resilience, too. If you're looking to run structured, repeatable attacks that simulate what real adversaries would do, Xbow delivers.

Mindgard

Born from 10+ years of UK university research, Mindgard is like a Swiss Army knife for AI security.

It integrates cleanly with CI/CD pipelines and supports MITRE/OWASP frameworks. Works with any AI model—even ChatGPT.

Garak

From NVIDIA, Garak is the nmap of LLMs. Lightweight, modular, and lethal.

Scans for:

It uses probes to generate inputs, detectors to assess responses, and logs everything from quick summaries to deep debug data. It’s open-source, so you can tweak it for your threat model.

Burp Suite

You know Burp Suite from web app testing—now meet its AI upgrade.

It extends your existing toolkit into AI territory without forcing a full relearn.

PentestGPT

What if GPT had a hacker mindset? That’s PentestGPT.

It’s a mentor, co-pilot, and engine rolled into one.

Wireshark

Old-school? Maybe. Still essential? Absolutely.

AI systems still use networks—and that’s where secrets leak.

Wireshark helps detect:

It runs on nearly every OS and delivers data in your format of choice. For catching network-layer weaknesses AI-specific tools miss, Wireshark is your silent guardian.

Bottom line: These tools don’t just patch holes—they help you understand how your AI can break and how to defend it before attackers do

AI pentesting looks flashy on paper—but reality hits differently. Beneath the dashboards and demos, real challenges are unfolding fast.

Most security teams weren’t built for AI. Firewalls and exploits? Sure. Transformers, embeddings, and model pipelines? Not so much. Globally, there’s a 4M+ talent gap. One in three tech pros admit they lack AI security skills, and 40% aren’t ready for AI adoption. Expertise is scarce—and without it, risks multiply quietly.

AI scanners aren’t magic. Bias in training data can blind them to real threats or make them cry wolf. The result? False alerts, missed vulnerabilities, and shaky trust in your security program.

AI tests raise questions no one wants to answer: who’s accountable if a model misbehaves—the vendor, the customer, or the engineer? Black-box models complicate everything. Without audit trails and explainable AI, you’re flying blind and courting liability.

Even seasoned teams stumble here. These challenges aren’t theoretical—they’re live, evolving, and fast. Address them early, or pay later.

An AI pentest isn’t a one-off checkbox—it’s a continuous game of discovery, adaptation, and defense. Follow these strategies to stay ahead:

Know what you’re testing. Model endpoints, APIs, data pipelines, and integrations should all be mapped. Include rules of engagement, success metrics, and kill switches before you start. Clarity saves time—and risk.

AI accelerates scans, identifies patterns, and filters false positives—but humans validate exploits, interpret behavior, and make judgment calls. This hybrid approach keeps tests both fast and precise.

Not all vulnerabilities matter equally. Rank risks by exploitability and business impact. Focus on what could actually harm your operations first, rather than chasing every minor issue.

AI systems evolve constantly. Quarterly—or even continuous—testing ensures new risks are caught before they become breaches. Tie testing into your DevSecOps pipeline for seamless retesting after patches or updates.

Every test should produce actionable reports, remediation guidance, and lessons learned. Feed these into CI/CD pipelines, model retraining, and future testing cycles. This turns each pentest into a learning loop.

By following these practices, AI pentesting becomes not just a test, but an evolving shield—keeping your models resilient, adaptive, and ready for new threats.

Here’s the truth: most AI security programs fail because they treat AI like regular software.

They’re not.

Organizations with structured AI testing detect 43% more vulnerabilities than those winging it. That’s not luck—that’s planning.

Stop buying tools just because the demo looks good. Use what fits your setup:

Match your tools to your threats:

The key is understanding what could go wrong in your specific AI setup—and choosing tools that address those risks.

Because it does.

AI systems evolve constantly, introducing new risks with every update. That’s why quarterly or continuous testing is essential—annual scans won’t cut it.

You should prioritize testing:

In healthcare and finance, where data sensitivity and compliance are critical, more frequent testing is a must. The cost of skipping it? Breaches, fines, and lost trust.

Security shouldn’t be an afterthought.

Integrate AI pentesting into your DevSecOps processes from the start:

Build testing into your pipeline, not around it. That’s how you stay ahead.

Smart orgs use PTaaS platforms to activate researchers instantly.

Don’t just have a security program. Make it work.

Traditional security isn’t enough anymore. Your AI systems aren’t just software—they make decisions, and attackers know exactly how to manipulate them. Most organizations are sleepwalking into disaster, unaware of the unique risks AI introduces.

Here’s what matters:

AI pentesting uncovers hidden vulnerabilities that conventional testing misses completely.

Specialized tools exist, but only deliver results when paired with strategy, skilled teams, and continuous evaluation.

Automated reporting and DevSecOps integration save time, reduce errors, and improve remediation speed.

The skills gap is huge: over 4 million cybersecurity positions remain unfilled globally, leaving organizations exposed.

Structured AI testing detects 43% more vulnerabilities compared to ad hoc, one-off assessments.

The AI security market is booming—from $1.7 billion in 2024 to a projected $3.9 billion by 2029. AI pentesting is not a one-off checkbox; it requires ongoing evaluation, adaptive playbooks, and proactive defense strategies to stay ahead of evolving threats.

The choice is clear: invest in AI pentesting now to safeguard your business and gain a competitive edge—or risk costly breaches and lost trust.

Stay alert. Stay tested. Stay protected.

#nothingtohide

Senior Security Consultant