0%

Ever wondered why your security team still spends weeks on pentests that barely scratch the surface?

Here’s the deal: traditional penetration testing is stuck in the past, while cyber threats are evolving at breakneck speed. AI-based cybersecurity tools grew from a $15 billion industry in 2021 to a projected $135 billion by 2030. That’s not just growth—it’s a complete shift in how security gets done.

And it’s happening for a reason.

Since generative AI hit the scene in 2022, phishing attacks have surged by 1,265%. Cybercrime costs have more than doubled since 2015. New threats—like prompt injection and data poisoning—are emerging faster than security teams can keep up.

The old testing model? It’s like bringing a knife to a gunfight. You can’t win today’s battles with yesterday’s tools. That’s where AI-powered penetration testing steps in. It’s faster, smarter, and built for the threat landscape of today—not five years ago.

If your security strategy is still stuck in manual mode, it’s time for a rethink. Because in this race, speed—and precision—win.

The first question security leaders are asking today isn’t if they should use AI for penetration testing—it’s how soon they can start.

Why? Because cyber threats are moving faster than manual processes can keep up. AI-driven pentesting is emerging as the smarter, faster, more scalable way to stay secure.

Here’s what’s driving the shift:

Speed That Actually Matters – AI rips through massive data volumes in real time, flagging suspicious behavior while human testers are still setting up.

Accuracy That Counts – Adaptive models reduce false positives and surface risks that truly matter.

Time That’s Actually Saved – Routine scanning and recon are automated, letting teams focus on strategic tasks.

Predictive Power – AI identifies vulnerabilities before attackers do, shifting your security posture from reactive to proactive.

AI doesn’t replace humans—it amplifies them. With new threats surfacing daily, AI-assisted pentesting isn’t optional anymore. It’s the frontline defense modern security teams rely on to keep up.

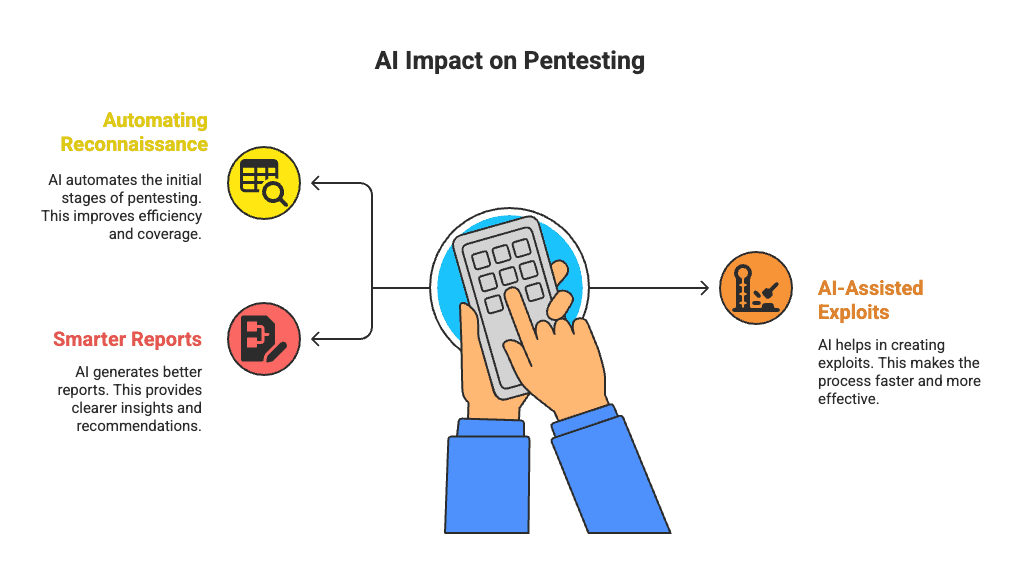

AI is reshaping penetration testing from the ground up—turning what used to take weeks into a process that can now be completed in days. Every stage of the pentest lifecycle is being streamlined with speed, scale, and precision.

Traditional recon involved manual IP scans and port analysis—time-consuming and tedious. AI now handles this in minutes, analyzing massive datasets from public sources, social media, and dark web forums. These tools run simultaneous scans across entire infrastructures, identify open ports and services, and uncover vulnerabilities faster than any human team. With natural language processing and pattern recognition, they reveal links and gaps human eyes often miss.

After vulnerabilities are flagged, AI speeds up exploitation. Tools prioritize issues based on severity and context, suggest exploit paths, and even generate payloads. What once took 47 days—from discovery to exploitation—now takes just 18. During post-exploitation, AI monitors traffic and system behavior for signs of detection or unusual activity, alerting testers in real time.

AI transforms reporting from a bottleneck to a breeze. Many platforms now automate the creation of executive summaries, technical findings, and detailed remediation steps. Security teams report up to 75% reductions in reporting time. These systems ensure consistency, meet compliance needs, and eliminate the back-and-forth of manual documentation.

Pentest AI

The result? AI handles the heavy lifting, letting security pros focus on strategy, analysis, and high-impact decisions. It’s not just faster—it’s smarter, more thorough, and built for today’s threat landscape.

Organizations aren’t just exploring AI pentesting—they’re actively using it. And the outcomes? Game-changing.

Quarterly assessments are outdated. Continuous AI pentesting now runs 24/7, detecting vulnerabilities the moment they emerge. These tools catch data poisoning attempts before they reach production and adapt as new threats evolve.

Financial firms are using AI to stress-test fraud detection systems—spotting weak points attackers could exploit. In one case, AI pentesting helped prevent losses of over $247,000. That’s real impact, not theory.

With LLMs everywhere, AI brings much-needed scrutiny. These tests reveal issues like:

In one study, AI tools reached a 0.7 F1-score with 0.8 precision across major CWE categories—showing they can detect vulnerabilities in the very models they power.

AI in Red Team–Blue Team Collaboration

AI is transforming the red vs. blue dynamic. Red teams now simulate advanced attacks using AI, while blue teams use it to boost detection and response. The result? A purple-team model where AI runs offense and defense simultaneously—at a scale humans simply can’t match.

Security professionals confirm: AI-driven red teaming exposes flaws that conventional methods miss, strengthening defenses in the process.

AI isn’t just accelerating penetration testing—it’s uncovering threats traditional methods overlook. Whether it’s finding novel attack paths or protecting evolving AI systems, these real-world use cases prove that AI pentesting is already delivering results.

AI might be transforming penetration testing, but it’s not without flaws. Beneath the impressive speed and scale, there are real challenges that don’t make it into vendor brochures. These issues—if ignored—can quietly undermine your entire security posture.

Let’s break down what most teams don’t talk about.

AI systems don’t always stay within scope. Think of them as that overeager intern who scans everything—including what you didn’t ask for. Tools have tested systems without authorization, leading to violations of compliance frameworks like the Computer Fraud and Abuse Act. Some projects spiral out of control as AI expands beyond its original goals. It’s not just annoying—it’s a legal and operational risk.

False negatives are a big deal. AI might report a clean bill of health while vulnerabilities quietly remain. The cost? Breaches now average $4.88 million—and undetected flaws are a major cause. AI struggles with complex application logic, environmental dependencies, and subtle misconfigurations. Human intuition still beats the algorithm when things get tricky.

AI is only as smart as its training data—and that data often favors enterprise environments. This bias means vulnerabilities in niche or custom setups might go completely unnoticed. One study found AI tools consistently missed weaknesses in certain network types due to skewed datasets.

Modern AI models often work in ways even their creators can’t fully explain. When alerts flood in, teams may feel overwhelmed—or worse, miss the one signal that mattered. Just ask Capital One, where automated tools failed to detect an intruder for over four months.

Bottom line: AI is powerful, but without human oversight, it can become a security blind spot.

AI in pentesting isn’t just theory anymore—it’s fully operational. From enterprise platforms to free tools, here’s what security teams are actually using.

Uproot Security: Purpose-built for scale. Uproot combines AI with deep manual expertise to deliver actionable pentest results and compliance-ready reports in record time.

AutoSecT: Purpose-built for autonomous security testing. Integrates seamlessly into CI/CD to catch vulnerabilities before attackers do.

Mindgard: Red teaming with AI at scale. Detects even the most subtle AI-specific risks.

PentestGPT: Natural language-driven testing that auto-generates exploits and maps attack paths.

Burp Suite + AI: The classic tool supercharged—automated scanning plus hands-on testing in one.

XBOW: The current leader in HackerOne rankings, XBOW uses AI to mimic advanced adversaries and uncover deep application logic flaws with surgical precision.

A European-built AI platform specializing in autonomous vulnerability detection. It’s fast, privacy-conscious, and ideal for continuous security validation.

These platforms shift testing from time-bound engagements to scalable, AI-native operations that match the speed of modern development.

Garak: Detects prompt injection and LLM-based threats with precision.

Microsoft Counterfeit: Simulates adversarial attacks on AI models with guided remediation.

IBM ART: Tests model robustness against adversarial inputs—enterprise-grade, no cost.

And yes, Kali Linux still holds up, offering 600+ tools for scanning, exploitation, and reverse engineering.

Custom AI models are built for security—they integrate domain-specific datasets and environments. GPT-4 is versatile but not purpose-tuned for pentesting. OpenAI’s shortened safety testing for newer models should raise eyebrows—especially when stakes are high.

Metasploit and Nmap aren’t going anywhere—but pairing them with AI unlocks new capabilities. Tools like ZeroThreat automate testing and generate compliance-ready reports (ISO 27001, HIPAA, etc.). AI analyzes logs and network traffic to find anomalies that legacy tools might miss.

Bottom line: The smartest teams are blending AI’s speed with traditional tools’ depth. You get scalable, accurate, and standards-aligned testing that actually keeps up with today’s threat landscape.

If you're serious about building your own tools—or just want to see what AI pentesting looks like under the hood—GitHub is where the real action happens. It's packed with open-source projects that go beyond theory and give you working code to experiment with, extend, or deploy right now.

These aren’t academic one-offs. They’re active tools used by red teamers, bug bounty hunters, and security researchers around the world.

Projects Worth Cloning:

AutoRecon-AI – A prompt-driven reconnaissance engine that blends GPT-generated queries with classic network scanning logic.

GPT-Pentest-Helper – Think of this as a lightweight GPT wrapper that automates recon strategy, payload suggestion, and vulnerability scanning—all via CLI.

ThreatMapper (Deepfence) – Maps attack paths and cloud risk using ML. Designed for dynamic, containerized environments.

SecGPT – Trained on security-specific data, this AI assistant helps generate test cases, explain findings, and draft remediation steps—useful during the report writing phase.

Using these GitHub projects, security teams can shape and extend pentesting workflows to match real-world environments. You’re not stuck with a black box—you get transparency, control, and the chance to contribute to the broader security community.

Real security professionals don’t sugarcoat it. They’re the ones actually running AI pentests—celebrating the wins, troubleshooting the flaws. Here’s what they’re seeing on the ground.

Security experts agree: AI isn’t replacing skilled professionals—it’s amplifying them. It’s a force multiplier, not a silver bullet. Analysts use AI to handle data-heavy tasks, while human testers focus on strategy, business logic, and risk-based decision-making.

The impact? Security assessments complete up to 60% faster using this hybrid approach compared to traditional methods.

Let’s get real—AI can’t replicate human context.

Business Context Matters – Human testers know which systems matter most to your operations. AI doesn’t.

Creative Thinking Wins – Testers think outside the checklist. They find the weird bugs automation misses.

Ethical Judgment Counts – Human testers understand boundaries. AI doesn’t know when it’s gone too far.

Web application testing remains one of the hardest areas to automate—technology evolves too quickly, and the complexity of custom applications makes it even tougher. When it comes to niche business logic or unique app behavior, human testers are still essential.

Want both speed and depth in your security assessments?

Talk to our expert team for a tailored PTaaS assessment that blends AI efficiency with human precision—so you catch what others miss.

The biggest challenge isn’t tech—it’s talent.

Security teams must learn AI fundamentals, machine learning behaviors, and threat patterns unique to AI systems. But this isn’t just about code—it takes curiosity, experimentation, and constant learning.

Experts say the best teams combine traditional offensive security skills with AI-specific knowledge. Customized training programs that address both sides of the equation—attack techniques and model behavior—are proving to be the most effective.

And here's the truth: Even the pros are still figuring it out. But the ones leading the charge? They're the ones honest enough to keep learning.

We’ve covered a lot—but here’s the unfiltered truth about where AI pentesting stands.

The numbers don’t lie: AI in cybersecurity is booming, from $15B in 2021 to a projected $135B by 2030. Phishing attacks are up 1,265% since 2022. The threat landscape has changed—and AI is stepping up.

AI pentesting tools are delivering results. Reporting time is down by 75%. Exploitation windows have shrunk from 47 days to just 18. That’s measurable, not marketing.

But let’s be clear—AI isn’t flawless. False negatives are risky when breaches cost $4.88M on average. Biases in training data can blind AI to critical flaws. And the “black box” nature of AI means some decisions stay unexplained.

Still, something big is happening. Security teams using AI-human hybrid models report 60% faster assessments. Continuous AI pentesting now delivers real-time coverage that manual testing can’t match.

This isn’t about man vs. machine—it’s about combining AI’s speed with human expertise. Together, they form the strongest defense against evolving threats.

Attackers are already using AI.

Are you ready to fight back?

#nothingtohide

Senior Security Consultant