0%

Ever feel like testing your LLM is just... guesswork?

You’re not alone—and you’re not wrong. In 2025, generative AI isn’t a novelty. It’s the engine powering products, shaping predictions, and driving billion-dollar strategies. Over 25% of enterprise leaders have already integrated it into their operations. Another 40% are actively planning to. This isn’t a hype cycle—it’s a high-speed AI arms race where hesitation costs market share.

And yet, most companies are still testing these systems like it’s 2019—click around, eyeball a few outputs, and call it “good enough”.

Here’s the brutal truth: unchecked LLMs hallucinate. They mislead. They embed bias and amplify risk—sometimes in customer-facing apps, sometimes in internal decision-making, sometimes in life-or-death situations. GPT-4 is already being used in medical diagnostics. Let that sink in.

The MIT team found that structured, rigorous testing can boost model accuracy by 600%. That’s not a tweak—it’s a transformation.

In 2025, LLM testing isn’t optional. It’s the dividing line between building something trustworthy—or detonating your product, brand, and reputation in one shot.

LLM testing is the process of checking large language models to ensure they produce accurate, reliable, and safe outputs. It’s not just about ticking boxes—it’s about stress-testing a model so it behaves predictably in the real world. Unlike traditional software, LLMs adapt to every input, twist with context, and sometimes hallucinate answers. Bias, toxicity, and errors can appear quietly—and then explode at scale.

In 2025, LLM testing isn’t optional. Here’s why it matters:

Top AI teams combine automated evaluation with human oversight, checking edge cases, ethics, and real-world scenarios. If you’re wondering how to test LLMs effectively, it starts with structured evaluation—automated checks, human review, and continuous feedback loops. They treat testing like a safety net—catching the subtle stuff before it reaches customers. Skip it, and you’re not innovating—you’re gambling with your AI, your product, and your reputation.

Testing LLMs properly means going beyond surface checks—it’s about understanding architecture, data flow, and how models behave under pressure. Testing an LLM isn’t a casual click-and-check. These systems are complex, unpredictable, and often critical to your business. You need structured testing that shows what to test—and why it matters.

Different LLM types—instruction-tuned, retrieval-augmented, or multimodal—need tailored testing methods to expose their unique weaknesses.

Start small. Unit tests focus on individual components and their responses to specific inputs.

Think of it like inspecting ingredients before cooking. Catching issues early prevents larger problems down the line. Applying a robust unit testing technique ensures even the smallest model components behave predictably before integration, reducing debugging time later. Tools like CANDOR can automate this process, generating tests that cover attention heads, embeddings, and more.

LLMs rarely operate in isolation. Integration testing checks that multiple models—or systems interacting with a model—work together seamlessly.

Great soloists don’t guarantee a great symphony. Integration testing ensures harmony across all components, especially when models with different architectures collaborate.

Models evolve. Updates can improve some behaviors but unintentionally break others. Regression testing acts as a tripwire:

Maintain a “golden dataset” of typical and edge-case inputs. Run it with every deployment. Drift is silent but costly—regression testing ensures your AI stays reliable over time.

Together, these three types—unit, integration, and regression—form the foundation of real-world LLM testing. They cover correctness, harmony, and stability. Skip one, and you’re leaving your AI—and your business—exposed.

Tired of guessing whether your LLM is working? Stop relying on gut checks or “feels okay.” Metrics are the only way to see what’s happening under the hood. A metrics-driven approach lets you measure, compare, and improve your model systematically.

These classics still matter:

BLEU: Measures how closely your output matches a reference. Focuses on precision—ideal for translations and exact answers.

ROUGE: Measures recall. ROUGE-N checks overlapping chunks, ROUGE-L identifies longest matching sequences—great for summaries.

METEOR: Goes beyond exact matches, catching synonyms and paraphrases with linguistic knowledge.

No single metric tells the full story. Use all three together to get a complete picture of accuracy.

Think of this as a kind of LLM IQ test—a data-driven way to measure how “smart,” consistent, and adaptable your model really is under real-world conditions.

Accuracy isn’t enough. Your LLM might produce biased or offensive outputs without warning.

Smaller models often struggle more with bias, but even large models need monitoring.

Users won’t wait. If it lags, it fails—so track:

Metrics-driven testing covers accuracy, safety, and speed. Each is critical. Miss one, and your LLM stops being a tool and becomes a liability. Metrics don’t just inform—they protect your users, your model, and your business.

Knowing what to test is one thing. Actually doing it? That’s where the right tools come in. These aren’t academic toys—they’re battle-tested, dev-friendly, and built for 2025-scale AI.

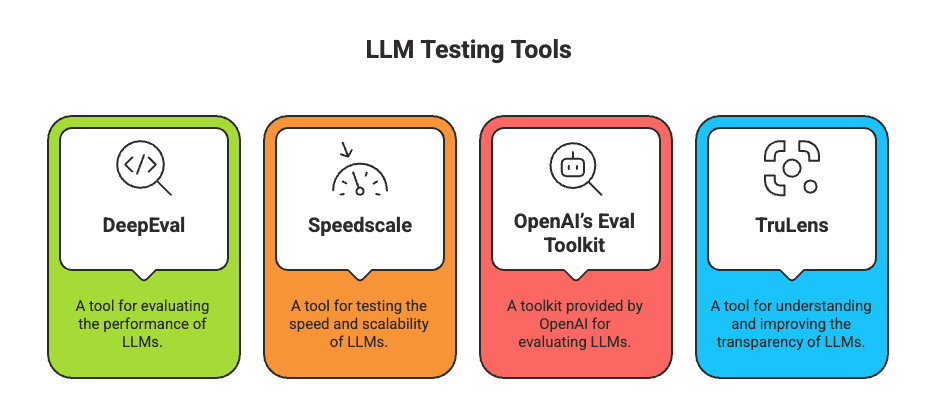

Here are four LLM testing tools worth having in your corner:

LLM Testing Tools

Each of these tackles a different slice of the testing puzzle—so let’s break down what they’re good at, where they shine, and why they matter.

DeepEval turns LLM evaluations into Pytest-style unit tests. DeepEval offers 14+ metrics for RAG, bias, hallucination, and fine-tuning. DeepEval delivers human-readable results and can generate edge-case data for chaos testing. DeepEval is modular, flexible, and production-ready.

Speedscale captures sanitized production traffic and replays it to uncover latency issues, error rates, and bottlenecks. Speedscale simulates real user behavior and adapts to your environment. Speedscale revealed one case where image endpoints slowed to 10s—caught before it hit prod.

OpenAI Eval Toolkit automates prompt testing with prebuilt tasks (QA, logic, code). OpenAI Eval Toolkit uses GPT-4 to grade GPT-3.5 outputs. OpenAI Eval Toolkit integrates cleanly into CI/CD pipelines—ideal for teams building fast, testing smarter.

ART focuses on security testing for prompt injection, model hijacking, and poisoning. ART works across major ML frameworks like TensorFlow and PyTorch. ART scores robustness across multiple data types.

Pick the risk that matters—then match it with the right tool.

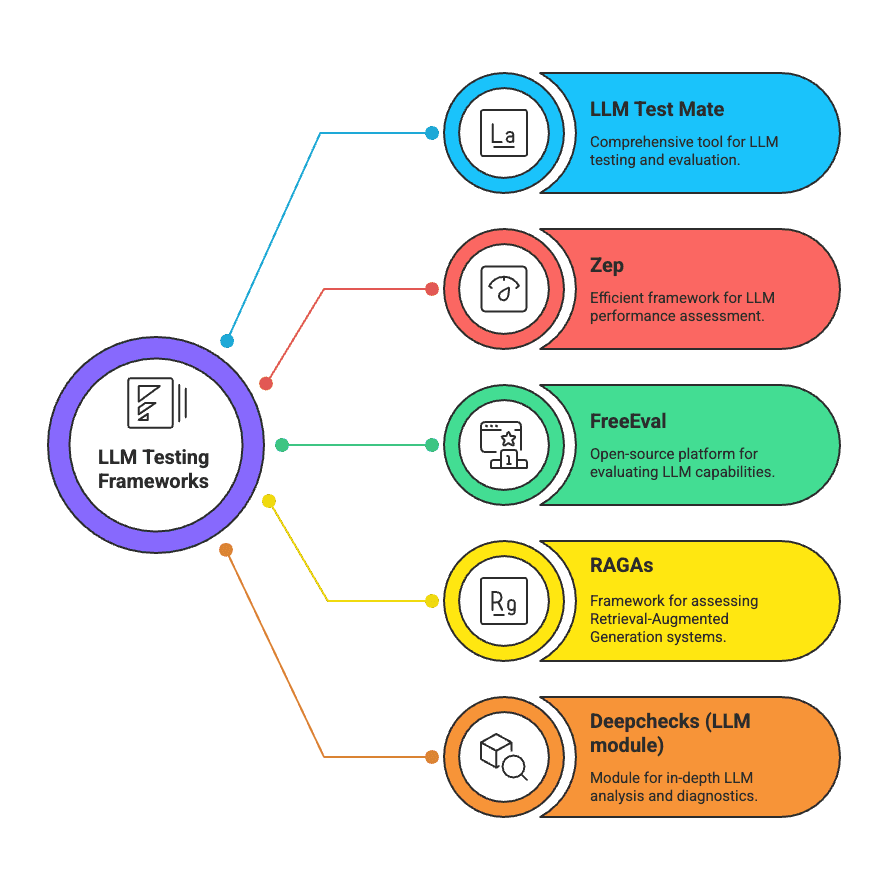

Beyond individual tools, LLM testing frameworks offer structure. They’re designed to help teams evaluate model performance, accuracy, and reliability at scale—with built-in support for continuous validation, bias detection, and semantic checks.

Here are five frameworks that matter in 2025:

Let’s get into each framework and see what makes them stand out:

Purpose-built for LLMs, Test Mate uses semantic similarity and model-based scoring to evaluate generated outputs. It's great for measuring coherence, correctness, and content quality.

Zep focuses on testing LLM-based apps for accuracy, consistency, and cost-effectiveness. It’s especially useful for teams looking to track performance over time and make fine-grained comparisons.

FreeEval goes deep with automated pipelines, meta-evaluation using human labels, and contamination detection. Built for scale, it supports both single-task and multi-model benchmarking.

Tailored for Retrieval-Augmented Generation, RAGAs calculates metrics like contextual relevancy, faithfulness, and precision. Ideal for LLMs that fetch data before responding.

Originally an ML validation library, Deepchecks now supports LLM-specific checks—bias, hallucination, and distribution shifts—plus dashboards that make results easier to act on.

LLM Testing Framework

Each of these frameworks tackles a different layer of the testing stack—some focus on pipeline health, others on prompt output. Together, they help you go beyond “does it work?” to “is it safe, fair, and production-ready?”

Automated or human testing? In 2025, top teams don’t pick one—they combine multiple methods to cover every angle. Scalable evaluation isn’t just about checking outputs—it’s about testing fast, at volume, and under real-world conditions, while catching subtle errors that could harm users, operations, or brand trust.

True testing and evaluation go hand in hand—testing finds what breaks, while evaluation measures how well the model adapts, scales, and learns from its own errors.

One LLM can test another, serving as both evaluator and auditor:

The judge model scores outputs using step-by-step criteria, log-probabilities, or pairwise comparisons. It can handle hundreds of outputs at once and maintain consistency across large datasets, catching subtle issues that traditional metrics miss, like coherence, factuality, and nuanced bias. This approach simulates human reasoning at scale, letting teams focus on critical failures instead of routine errors.

Automation handles routine cases, but some outputs need human judgment:

High-stakes outputs—healthcare, finance, legal—require humans to validate reasoning, evaluate edge cases, and provide qualitative insights that automated metrics alone can’t capture. Humans also catch unexpected ethical or contextual issues that models may overlook.

This method blends user preferences with stress testing:

Behavioral testing exposes weaknesses under unusual conditions. Fine-tuned evaluation models reduce errors by up to 44% compared to few-shot prompts, providing trustworthy insights for high-stakes deployment.

Generic tests aren’t enough:

Experts design prompts and rubrics; LLM judges apply them to deliver repeatable, explainable results, aligned with domain requirements and organizational standards, creating a robust framework for safe, reliable, production-ready LLM deployment.

Testing doesn’t stop at launch. That’s where most teams blow it.

They validate once, deploy—and six months later, the model starts drifting, hallucinating, and hurting trust. Don’t be that team.

“Let’s see how it performs” isn’t a test plan. It’s a gamble.

Clarity kills ambiguity. If it’s not measurable, it doesn’t count.

Most test data is too clean. Real users aren’t.

Perfect tests on perfect data won’t protect you.

Your model degrades—even if you don’t touch it.

Models lose relevance over time. Up to 45% of responses can degrade post-launch without monitoring.

Your best testers are already using the product.

Amazon’s RLAIF boosted scores by 8%—all from structured user feedback.

Treat your post-deployment phase like your pre-launch. It’s not an afterthought—it’s where reliability is made or lost.

Most prompt design is spaghetti testing—toss it, pray it sticks. But in testing, bad prompts don’t just waste time—they give you false confidence. That’s worse than no testing at all. The right prompts to test LLMs can reveal weak spots in reasoning, bias handling, and contextual awareness. Smart teams now maintain libraries of reusable LLM test prompts to benchmark model consistency.

Real users aren’t neat. Your prompts shouldn’t be either.

Experts put it bluntly: AI agents might crush clean labs but choke in the wild. Test for that world.

Break it before it breaks you.

If you’re not attacking your own system, someone else will.

Your org is unique. Your prompts should be too.

Generic prompts = generic failures.

Prompt rot is real.

In 2025, leading teams treat prompts like production code. Versioned. Tested. Audited.

Because if your prompt breaks and you can’t explain why—you’re not testing. You’re guessing.

LLM testing isn’t a nice-to-have. It’s the guardrail between innovation and chaos. With 40% of enterprises baking generative AI into their core strategy—and models still hallucinating or misbehaving 3–10% of the time—testing is survival, not an option.

The playbook is clear. Unit tests cover the basics, making sure individual components behave as expected. Integration tests ensure multiple systems and models work together seamlessly. Regression tests catch silent failures before they reach users. Metrics like BLEU, ROUGE, and METEOR reveal what the model got right, while WEAT and bias benchmarks show where it could break trust. Latency and throughput metrics prove whether it can perform under pressure.

Tools have leveled up too. DeepEval, Speedscale, and OpenAI’s evaluation suite bring production-grade rigor. Hybrid approaches, like LLM-as-a-Judge paired with human review, combine scale with oversight, catching subtle errors while slashing costs.

Testing in 2025 rests on four pillars: clear goals, diverse datasets, continuous monitoring, and real feedback. Skip it, and you’re gambling with your AI, your product, and your reputation.

Testing isn’t optional—it’s the difference between AI that scales and AI that fails.

Building with LLMs? Don’t ship blind. Get precision testing, real-world evaluation, and zero guesswork. Talk to our team and deploy with confidence.

Senior Security Consultant