0%

Large Language Models (LLMs) have revolutionized web applications by making it possible to incorporate sophisticated features such as customer support automation, language translation, SEO optimization, video creation, and content analysis. These AI models are trained on enormous datasets and employ machine learning to comprehend and create human-like text, thus being extremely efficient at providing context-aware and relevant answers.

In the current web ecosystem, LLMs are widely applied for:

In addition to their advantages, LLMs also come with new security threats. Their exposure to sensitive information, APIs, and user inputs exposes them to misuse. Adversaries can use focused attacks to drive malicious model behavior, steal confidential information, or induce adverse effects.

As LLMs are increasingly embedded in business processes, it is important to understand these risks. This guide delves into significant web-based LLM attack types, detection, defense strategies, and best practices for using LLMs in web applications safely and responsibly.

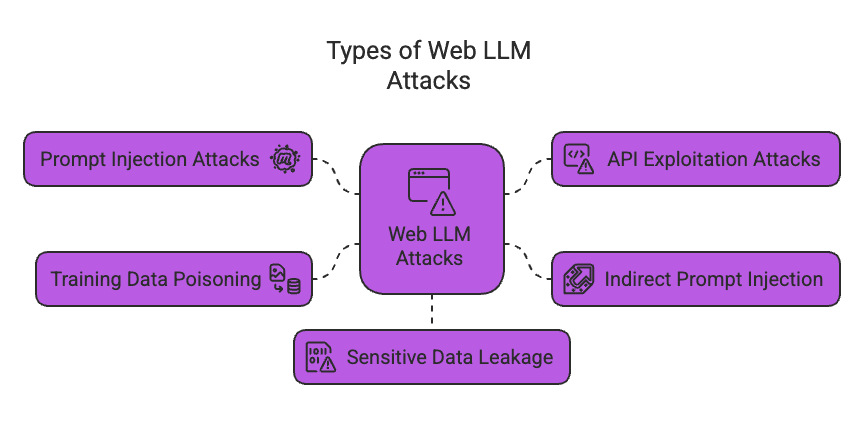

Web LLM Attacks Types.png

As powerful as Large Language Models (LLMs) are, their integration into web applications opens up a range of potential security threats. Web LLM attacks can take many forms, exploiting the model's capabilities and its access to sensitive data and APIs. Understanding these attack vectors is essential for building secure AI-driven systems.

Here are the major types of Web LLM attacks:

Let’s dive deeper into each one:

Prompt injection attacks occur when an attacker crafts specific inputs (prompts) that manipulate the LLM into performing unintended actions or generating unintended responses. Such attacks can lead to the extraction of sensitive information or the execution of unauthorised commands.

An attacker inputs a cleverly designed prompt that tricks the LLM into revealing confidential user data.

A prompt is crafted to manipulate the LLM into making unauthorised API calls, resulting in data breaches or system malfunctions.

Prompt injection attacks can compromise the confidentiality, integrity, and availability of web applications, leading to data leaks, unauthorised actions, and potential system downtime.

API exploitation attacks leverage the LLM's access to various APIs, manipulating the model to interact with or control APIs in unintended ways. This can result in malicious actions being performed through the exploited APIs.

An attacker uses the LLM to send harmful requests to an API, such as executing a SQL injection attack.

The LLM is manipulated to interact with an API in a way that bypasses security controls, resulting in unauthorised data access.

These attacks can lead to data breaches, unauthorised transactions, and exploitation of other vulnerabilities within the web application’s infrastructure.

Indirect prompt injection attacks involve embedding the malicious prompt in a medium that the LLM will process later, such as documents or other data sources. This indirect approach can cause the LLM to execute unintended commands when it processes the embedded prompts.

A harmful prompt is hidden within a document that is later processed by the LLM, causing it to perform unauthorized actions.

An email containing a malicious prompt tricking the LLM into creating harmful rules or actions.

Indirect prompt injections can lead to unauthorised actions being executed without direct input from the attacker, complicating detection and mitigation efforts.

Training data poisoning involves tampering with the data used to train the LLM, leading to biased or harmful outputs. Attackers can insert malicious data into the training set, causing the LLM to learn and replicate these harmful patterns.

Training the LLM with biased data, resulting in discriminatory or unethical outputs.

Inserting incorrect information into the training data, causing the LLM to produce misleading responses.

Training data poisoning can degrade the quality and reliability of the LLM’s responses, leading to reputational damage and loss of user trust.

Sensitive data leakage exploits LLMs to reveal confidential information they have been trained on or have access to. Attackers craft specific prompts that coax the LLM into divulging information that should remain confidential.

Sensitive data leakage can result in significant privacy breaches, legal ramifications, and loss of customer trust.

Direct inputs refer to the prompts or questions directly fed into the LLM by users. These are the most immediate and apparent points of interaction with the model.

Indirect inputs include the training data and other background information the LLM has been exposed to. These inputs are not directly controlled by users but significantly influence the model’s behaviour and responses.

It’s crucial to determine what kind of data the LLM can reach, including customer information, confidential data, and other sensitive information.

Identifying the APIs the LLM can interact with, including internal and third-party APIs, helps map the potential attack surface.

Conduct various tests to see if the LLM can be tricked into producing inappropriate responses or actions through crafted prompts.

Examine if the LLM can be used to misuse APIs, such as unauthorised data retrieval or triggering unintended actions.

Test if the LLM can be prompted to reveal sensitive or private data it shouldn’t disclose.

Related Read – Understanding the Types of Penetration Testing Services

These case studies explore various attack scenarios involving web LLMs (Large Language Models). For each case study, we'll review the scenario description and attack vector analysis.

A malicious user discovers a vulnerability in the prompt handling of a customer service chatbot powered by an LLM. They craft a specific prompt that tricks the LLM into revealing confidential customer information, such as credit card details or account passwords.

This is an example of prompt injection. The attacker exploits flaws in how the web application processes user input and injects malicious code within the prompt. The LLM, unaware of the manipulation, processes the prompt and generates a response containing sensitive data.

An attacker targets a news website that uses LLM for real-time translation. They inject a manipulative prompt that alters the original article's meaning to spread misinformation or propaganda. The translated version presents a distorted narrative, potentially influencing readers.

This scenario exploits the LLM's dependence on context and prompts. The attacker injects a prompt subtly altering the translation instructions, pushing the LLM to generate biased or false content.

An SEO specialist exploits an LLM used by a search engine to generate meta descriptions for websites. They manipulate the LLM with specific prompts to inflate the website's ranking in search results, even if the website content is irrelevant or low quality.

This scenario goes beyond manipulating search engine algorithms. The attacker targets the LLM responsible for generating meta descriptions, feeding prompts that prioritise keywords over actual content relevance.

A social media platform uses LLM to analyse and categorise content. Attackers exploit vulnerabilities in the LLM to bypass content moderation filters. They can upload malicious content disguised as harmless posts, allowing them to spread hate speech, malware, or other harmful content.

This scenario highlights the vulnerability of AI models to adversarial examples. Attackers might craft content that appears innocuous to humans but triggers specific responses within the LLM, bypassing content moderation filters.

A group of activists targets a platform using an LLM to moderate user-generated content. They submit a massive amount of manipulated data with specific biases. This data influences the LLM's decision-making, leading it to censor legitimate content while allowing hateful or offensive content to remain.

This scenario explores data poisoning. Attackers intentionally manipulate the training data fed to the LLM, introducing biases that skew its content moderation decisions.

As large language models (LLMs) become increasingly integrated into various applications, it's critical to establish strong defence mechanisms to safeguard against potential attacks. The following strategies provide a comprehensive approach to securing LLM interactions, focusing on treating APIs as publicly accessible, limiting sensitive data exposure, and implementing robust prompt-based controls.

Authentication and Authorisation: Ensure that every API endpoint interacting with the LLM requires robust authentication mechanisms, such as OAuth, API keys, or JWT (JSON Web Tokens). Role-based access control (RBAC) should be used to grant access permissions based on the user's role, ensuring minimal access required for the task.

Rate Limiting and Throttling: Implement rate limiting to control the number of requests a user or application can make to the API within a given timeframe. This feature prevents abuse and potential denial-of-service (DoS) attacks.

IP Whitelisting and Blacklisting: Restrict access to the API by allowing only known and trusted IP addresses (whitelisting) or blocking specific IPs (blacklisting) that exhibit malicious behavior.

HTTPS/TLS Encryption: Always use HTTPS to encrypt data in transit, protecting it from interception and man-in-the-middle attacks.

Regular Security Audits: Conduct regular security audits and vulnerability assessments of your API infrastructure. Employ tools like OWASP ZAP or Burp Suite to identify and mitigate security vulnerabilities.

Patch Management: Keep all API-related software and dependencies up-to-date with the latest security patches to protect against known vulnerabilities.

Data Minimization: Only provide the LLM with the data necessary for its tasks. Avoid exposing it to unnecessary sensitive or confidential information.

Data Anonymisation: Where possible, anonymise data before processing it through the LLM. Remove or mask personally identifiable information (PII) and other sensitive details.

Pre-processing Data: Implement data pre-processing steps to sanitize training data, removing any sensitive information. Use tools and scripts to scan and redact confidential data automatically.

Data Filtering: Use data filtering mechanisms to ensure that the LLM's responses do not inadvertently leak sensitive information. This can include keyword filtering or more advanced content analysis techniques.

Awareness of Prompt Injection: Be aware that prompt injection attacks can manipulate the LLM's output by carefully crafted input prompts. This requires continuous vigilance and countermeasures.

Layered Security: Do not rely solely on prompt instructions for security. Implement multiple layers of security, including input validation, output filtering, and anomaly detection.

Dynamic Prompts: Regularly update prompt configurations to address new vulnerabilities and adapt to evolving attack methods. This involves staying informed about the latest research and best practices in LLM security.

Prompt Testing: Continuously test prompts under various scenarios to ensure they perform as expected. Use adversarial testing to simulate potential attacks and evaluate the robustness of your prompt-based controls.

As the integration of Large Language Models (LLMs) into web applications becomes increasingly widespread, the importance of robust security measures cannot be overstated. Web LLM attacks, such as prompt injection and API exploitation, data poisoning, and leakage, are serious threats to user data, application integrity, and organisational trust. Understanding the diverse forms of these attacks is the first step toward building effective defences. By identifying input sources, probing vulnerabilities, and securing access points like APIs and sensitive data, organisations can significantly reduce their risk exposure.

The existence of multi-layered security controls—such as rigorous access control, data reduction, secure API practices, and regular prompt testing is the assurance that LLMs are treated securely and ethically. Maintaining a continuous loop of periodic checks, updates, and tests to stay ahead of sophisticated threats is equally critical. Through careful planning and vigilant monitoring, businesses can realise the potential of LLMs while being capable of sustaining a strong security posture and protecting user trust and operational stability.

Head of Security testing