
Ever wondered why AI is suddenly everywhere—from tech blogs to government hearings? It’s because AI isn’t just a flashy tool anymore; it’s shaping real-world decisions, from hiring and lending to who gets visibility online.

Regulators are paying attention. Mentions of AI in legislation rose 21.3% across 75 countries from 2023 to 2024, bringing the total increase since 2016 to ninefold.

Why the urgency? AI isn’t perfect. Algorithmic bias can deny loans based on zip codes, not credit scores. Generative AI can fabricate videos or misuse personal data. Safety is another concern when AI recommendations affect real lives.

The public is aware: a 2023 poll found 76% of Americans want federal AI regulation, with both Republicans and Democrats in surprising agreement.

Whether it’s fairness, privacy, or accountability, AI is no longer a distant innovation—it’s here. And the question isn’t if regulation will arrive, but whether you’ll be ready when it does.

Regulatory AI is about setting the rules for how artificial intelligence operates in the real world, rules that governments and institutions are increasingly codifying into formal regulations for AI. They are stepping in because AI decisions have real consequences that are sometimes unfair, unsafe, or illegal. Algorithmic bias, privacy violations, and opaque decision-making are no longer hypothetical; they’re daily risks.

The goal of AI regulation is simple: make these systems accountable. Transparency ensures people understand how AI affects them. Accountability assigns responsibility when things go wrong. Fairness prevents discrimination baked into algorithms. Privacy safeguards personal data. Human oversight keeps critical decisions from being fully automated.

Frameworks like the EU AI Act and the NIST AI Risk Management Framework are early attempts to manage AI by risk. The EU AI Act sorts systems into tiers, from banned systems to low-risk tools requiring minimal oversight, while the NIST framework is voluntary guidance for identifying and managing AI risk. The key takeaway: regulation isn’t about halting innovation; it’s about creating a safety net.

Businesses that understand these principles can innovate responsibly while staying ahead of regulatory pressures.

Financial regulators aren’t waiting for perfect AI laws. As part of the broader regulation of artificial intelligence technologies, they are applying existing laws and risk-based examinations to AI rather than creating rules from scratch, and they are increasingly using AI to monitor AI. Think of it like using one speed limit to catch both cars and motorcycles.

Banks and financial institutions are investing heavily in AI-powered compliance. As early as 2018, a study found that 70% of risk and tech professionals were already using AI for compliance. Today, AI monitors activity in real time, from traders to customers, cutting through false alarms and spotting patterns humans might miss, like money laundering or market manipulation. The shift is clear: from rigid “if this, then that” rules to predictive systems that learn what normal activity looks like and flag what deviates from it.
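To make the contrast concrete, here is a minimal Python sketch of a fixed rule next to a check based on a customer's own history. It is an illustration only: the limit, the z-score cutoff, and the sample amounts are all assumptions, and a simple z-score stands in for the far richer models a real monitoring system would use.

```python
from statistics import mean, stdev

FIXED_LIMIT = 10_000  # hypothetical static threshold for the rule-based check


def rule_based_flag(amount: float) -> bool:
    """Classic 'if this, then that' rule: flag anything above a fixed limit."""
    return amount > FIXED_LIMIT


def history_based_flag(amount: float, history: list[float], z_cutoff: float = 3.0) -> bool:
    """Flag an amount that is unusual relative to this customer's own history."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return amount != mu  # any change from a perfectly constant history is unusual
    return abs(amount - mu) / sigma > z_cutoff


# Hypothetical customer who normally moves around 50 per transaction.
small_saver = [40.0, 55.0, 60.0, 45.0, 50.0]

print(rule_based_flag(4_000.0))                  # False: under the fixed limit
print(history_based_flag(4_000.0, small_saver))  # True: far outside this customer's pattern
```

The fixed rule misses a transfer that is wildly out of character for this customer, while the history-based check catches it. The same idea, applied with better models and more signals, is what helps reduce false alarms on customers for whom large transfers are routine.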

Regulatory AI agents handle mountains of regulatory documents so humans don’t have to. They extract key data, create actionable alerts, and even compare regulations across countries. They translate legal jargon into plain language, tailored to your business, with citations for every answer. Pharmaceutical companies, for example, have processed over 65,000 compliance documents across multiple markets.

Generative AI is replacing static policy binders with dynamic, interactive systems. Employees ask natural-language questions and get instant guidance. AI automatically updates access controls, flags gaps between procedures and regulations, and catches inconsistencies before violations occur. Humans still make judgment calls, but AI handles the heavy lifting—turning compliance from a guessing game into a manageable, automated process.

Building effective AI rules isn’t rocket science. But it does require getting a few fundamentals right. Most countries are winging it, tossing together random policies and hoping for the best. The smart ones? They follow a blueprint that actually works.

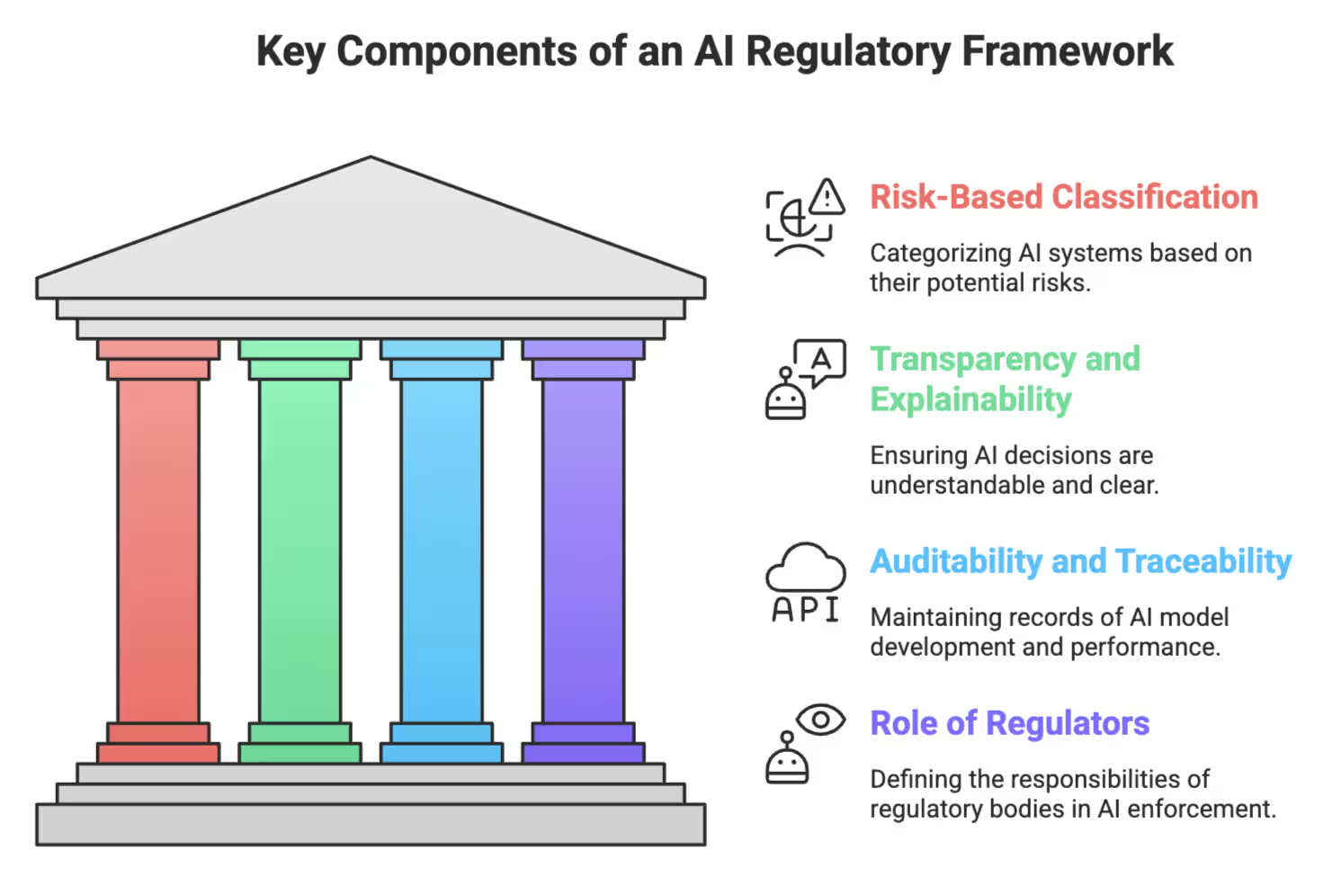

These are the core elements of a strong AI regulatory framework:

Key Components of AI Regulatory Framework

Let’s get into each of these and see how they shape responsible AI governance.

Not all AI is created equal—treating every system the same is a common mistake.

The EU’s tiered approach shows the math: by one estimate, 18% of AI systems are high-risk, 42% are low-risk, and 40% fall into regulatory limbo, where unclear classification creates confusion that slows innovation.
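A risk-tiered approach can be pictured as a simple lookup from use case to tier. The Python sketch below is a rough illustration, not a legal classification: the tier labels paraphrase the commonly described EU AI Act categories, and the example use cases and their assigned tiers are assumptions chosen for the demo.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict obligations"
    LIMITED = "transparency obligations"
    MINIMAL = "little or no oversight"


# Hypothetical mapping of use cases to tiers, for illustration only.
# Real classification depends on the legal text and the deployment context.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "resume_screening": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def classify(use_case: str) -> Optional[RiskTier]:
    """Return the assumed tier, or None when the use case is not in the table."""
    return EXAMPLE_TIERS.get(use_case)


for name in ["resume_screening", "spam_filter", "route_optimizer"]:
    tier = classify(name)
    print(name, "->", tier.name if tier else "UNCLEAR: needs legal review")
```

The `None` branch is the interesting one: a system that does not map cleanly to any tier is exactly the "regulatory limbo" case, and it needs human legal review rather than a default label.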

Transparency isn’t just a buzzword; it’s measurable and actionable.

Developers must provide “instructions for use,” highlighting limitations, potential failures, and operational guidance.

Permanent audit trails are essential—your AI needs a passport.

Integrated from the start, auditability ensures AI is transparent, accountable, and compliant by design.
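One common way to make an audit trail tamper-evident is to chain each entry to the previous one with a hash. The Python sketch below shows the idea under simple assumptions: the `AuditTrail` class, the record fields, and the model name are all hypothetical, and a production system would also need durable storage, timestamps, and access controls.

```python
import hashlib
import json


def _digest(record: dict, prev_hash: str) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only log where every entry commits to the one before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        self.entries.append(
            {"record": record, "prev": prev_hash, "hash": _digest(record, prev_hash)}
        )

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = ""
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != _digest(entry["record"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append({"model": "credit-v1", "input_id": "a1", "decision": "approve"})
trail.append({"model": "credit-v1", "input_id": "a2", "decision": "deny"})
print(trail.verify())  # True

trail.entries[0]["record"]["decision"] = "deny"  # simulate after-the-fact tampering
print(trail.verify())  # False
```

Because each hash depends on everything before it, quietly rewriting an old decision is detectable unless every later entry is rewritten too, which is the property an auditor cares about.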

Regulations without enforcement are just suggestions.

Effective AI governance balances oversight with practicality, targeting risk where it matters most. Specific, measurable, enforceable rules make regulation real—everything else is just bureaucratic theater.

Tech companies love showcasing shiny AI demos. What they don’t show are the messy, complicated, and sometimes harmful issues behind these systems. These aren’t hypothetical—they affect real people, right now.

Algorithmic bias is simple in concept but devastating in practice. AI systems learn prejudice from historical data, producing unequal outcomes. African American defendants have faced higher risks of being wrongly labeled as likely to reoffend. AI mortgage systems have charged minority borrowers more for identical loans. Even major tech companies, like Amazon, had to scrap recruiting tools that systematically discriminated against women. The EU enforces strict rules, with fines for prohibited practices of up to €35 million or 7% of global annual revenue, whichever is higher.
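Unequal outcomes like these can be measured. One widely used screening metric is the disparate impact ratio: the lower group's approval rate divided by the higher group's. The Python sketch below uses made-up approval data, and the 0.8 cutoff is the commonly cited "four-fifths" rule of thumb, used here as an assumption rather than a legal test for any particular jurisdiction.

```python
def approval_rate(decisions: list[bool]) -> float:
    """Share of applicants in a group who were approved."""
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(group_a: list[bool], group_b: list[bool]) -> float:
    """Lower approval rate divided by the higher one (1.0 means parity)."""
    rate_a, rate_b = approval_rate(group_a), approval_rate(group_b)
    high, low = max(rate_a, rate_b), min(rate_a, rate_b)
    if high == 0:
        return 1.0  # nobody approved in either group, so no disparity to measure
    return low / high


# Hypothetical loan decisions: True means approved.
group_a = [True] * 8 + [False] * 2   # 80% approved
group_b = [True] * 5 + [False] * 5   # 50% approved

ratio = disparate_impact_ratio(group_a, group_b)
print(f"ratio = {ratio:.3f}")
print("needs review" if ratio < 0.8 else "within rule of thumb")
```

A low ratio does not prove discrimination on its own, but it is a cheap signal that a model's outcomes deserve a closer look before a regulator takes one.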

AI depends on massive datasets, often without explicit consent. Individuals have discovered personal medical photos in training datasets without permission. Ownership of AI-generated content is murky: does it belong to the creator, the user, or the company? Courts are already reviewing cases where AI outputs closely mimic copyrighted works. AI’s appetite for data increases the risk of privacy violations, and information that was once anonymous can now be re-identified.
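Re-identification risk can be estimated with a simple k-anonymity check: count how many records share each combination of seemingly harmless attributes. The Python sketch below is a minimal illustration with invented records, and the choice of ZIP code, birth year, and sex as quasi-identifiers is an assumption for the demo.

```python
from collections import Counter


def smallest_group(records: list[dict], quasi_identifiers: list[str]) -> int:
    """Return k: the size of the rarest combination of quasi-identifier values."""
    groups = Counter(
        tuple(record[key] for key in quasi_identifiers) for record in records
    )
    return min(groups.values())


# Hypothetical "anonymized" records with names already removed.
records = [
    {"zip": "02139", "birth_year": 1985, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1985, "sex": "F", "diagnosis": "flu"},
    {"zip": "02139", "birth_year": 1990, "sex": "M", "diagnosis": "diabetes"},
]

k = smallest_group(records, ["zip", "birth_year", "sex"])
print(f"k = {k}")
if k == 1:
    print("At least one person is unique on these fields and could be re-identified.")
```

When k is 1, someone who knows a person's ZIP code, birth year, and sex from another source can link that person to a sensitive record even though no name was ever stored.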

The “responsibility gap” is a major challenge. When AI decisions cause harm, it’s unclear who is accountable—the programmer, deployer, company, or manager. Traditional accountability frameworks assume predictable human behavior, but AI operates differently, with multiple actors and opaque processes. Diffused responsibility creates ethical and legal dilemmas. Solving these problems requires not just better technology but rethinking how organizations assign responsibility when machines increasingly make decisions that affect human lives.

The global AI regulatory landscape is fragmented—and that’s a problem. This fragmentation fuels the ongoing AI regulation debate among policymakers and industry leaders worldwide. By 2023, only 32% of countries had AI-specific regulations. The rest are still figuring it out. Even among those with rules, there’s little agreement on what good governance actually looks like, creating a patchwork that slows innovation.

The EU became the first region to introduce a comprehensive AI law, categorizing AI systems by risk.

The phased rollout (2025–2027) balances innovation with safety, sending a clear signal: AI can advance responsibly if properly governed.

The US follows a patchwork approach, relying on federal guidance complemented by state legislation.

With widely varying state rules, companies face complex compliance challenges when operating across the country.

India is preparing regulations while learning from global experiences. The regulation of artificial intelligence in India is still evolving, with initial focus on high-risk sectors.

These developments are shaping the artificial intelligence laws in India, providing a foundation for future comprehensive rules. This cautious approach allows India to avoid early missteps while encouraging sector-specific innovation.

China enforces strict public-facing AI rules but lenient enterprise and defense policies.

The goal: dominate AI by 2030 while controlling citizen-facing systems.

Fragmented regulations are slowing global AI development. Systems compliant in one region may violate laws elsewhere, forcing companies to spend more time on compliance than innovation. AI keeps advancing, raising the question: will global standards emerge before technology outpaces regulation?

AI regulation isn’t just approaching—it’s accelerating. By the end of 2024, over 70 countries had published or drafted AI-specific rules. This isn’t a slow trend; it’s a global push reshaping how AI will be governed in the coming years. Organizations need to prepare for overlapping frameworks, cross-border scrutiny, and evolving standards.

International summits are moving from photo ops to practical action.

Global coordination is becoming tangible, with countries building actionable systems rather than vague promises.

Cross-border compliance remains a major challenge for organizations.

Companies are realizing that embedding compliance from day one is critical for global AI operations.

The EU continues to lead in regulating general-purpose AI systems.

Real-time development of these frameworks shows that AI regulation is evolving alongside technology, and companies must adapt proactively.

AI regulation isn’t a future problem—it’s happening now. The global race, messy rollouts, and ethical dilemmas make one thing clear: this isn’t about ticking boxes. It’s about building AI responsibly from day one.

The EU’s tiered approach is smart: only 18% of AI systems face heavy oversight, while most operate under lighter rules. China takes a different tack—strict rules for public-facing AI, lenient for enterprise and research. Both show that context matters when regulating innovation.

The real challenge is navigating the global patchwork. What’s legal in California might violate EU law in Brussels. Companies are learning that compliance can’t be bolted on after the fact. Risk-aware design and governance must be integrated into AI from the start.

Regulation may be imperfect, but it’s necessary. Ethical concerns—bias, accountability gaps, privacy—require human judgment, not code. Organizations that embed responsible AI now will thrive. Those that don’t will learn the cost the hard way. The choice is yours.

Take control of AI compliance, reduce regulatory risk, and build trust in your AI initiatives with UprootSecurity — where GRC becomes the foundation of responsible and scalable AI governance.

